Learnings from implementing Multi-Agent Systems & Sequential Workflows

Notes from my effort to understand agentic systems and how deep research works

I wanted to replicate a deep research pipeline to learn more about agents for use cases like automating market research reports. Instead of relying on an existing framework like the Agents SDK, I decided to implement the agents myself, both to understand the concepts better and to keep control over state and storage.

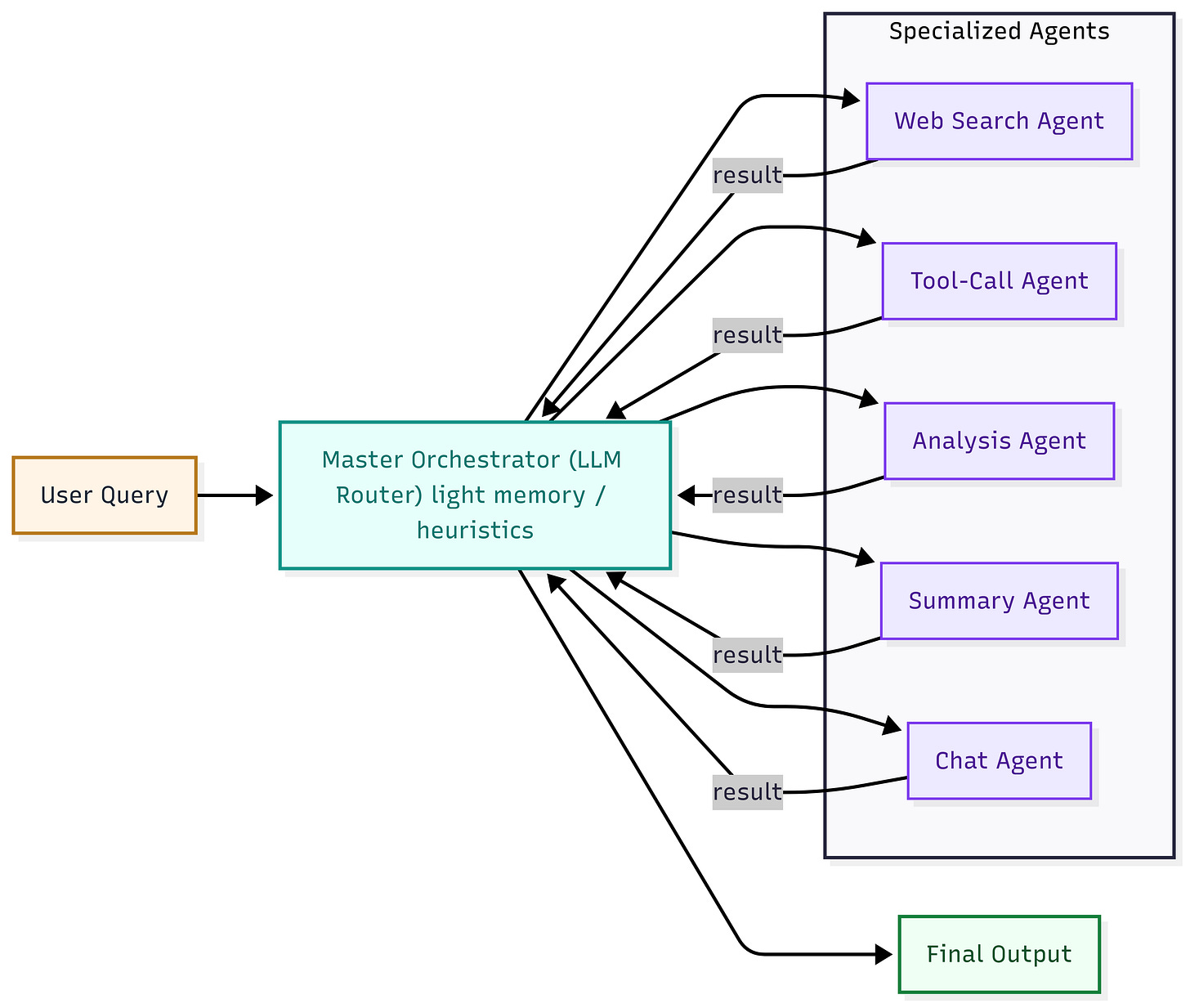

My Initial Multi-Agent Approach

I built a single master orchestrator agent that analyzed incoming messages and routed them to specialized agents for:

Web search

Tool calls

Analysis

Summary

Chat
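The routing idea can be sketched roughly as follows. This is a minimal illustration, not the actual implementation: `make_agent`, the `AGENTS` registry, and `keyword_classifier` are hypothetical names, and the keyword matcher is only a stand-in for the routing decision that the orchestrator agent made with an LLM.

```python
from typing import Callable, Dict

Agent = Callable[[str], str]


def make_agent(name: str) -> Agent:
    """Build a placeholder agent; a real one would wrap an LLM call."""
    def agent(message: str) -> str:
        return f"[{name}] {message}"
    return agent


# Hypothetical registry of the five specialized agents.
AGENTS: Dict[str, Agent] = {
    name: make_agent(name)
    for name in ("web_search", "tool_call", "analysis", "summary", "chat")
}


def keyword_classifier(message: str) -> str:
    """Assumed stand-in for the orchestrator's LLM routing decision."""
    text = message.lower()
    if "search" in text:
        return "web_search"
    if "analyze" in text or "analyse" in text:
        return "analysis"
    if "summar" in text:
        return "summary"
    return "chat"


def route(message: str, classify: Callable[[str], str] = keyword_classifier) -> str:
    """Pick an agent from the classifier's label and hand the message over."""
    label = classify(message)
    agent = AGENTS.get(label, AGENTS["chat"])  # unknown labels fall back to chat
    return agent(message)


print(route("Search for EV market size"))
```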

This setup worked at first, but problems appeared as the context grew:

After 5–10 messages, the orchestrator started sending queries to the chat agent instead of the analysis agent, likely due to memory handoff issues.

Tool call accuracy dropped to 50–60% after 10 messages.

The orchestrator would call the web search tool three times for the same question.

The orchestrator struggled to keep track of what it was doing, a common limitation of simple master-agent setups without structured memory.

Despite extensive prompt engineering, the fundamental issues persisted.

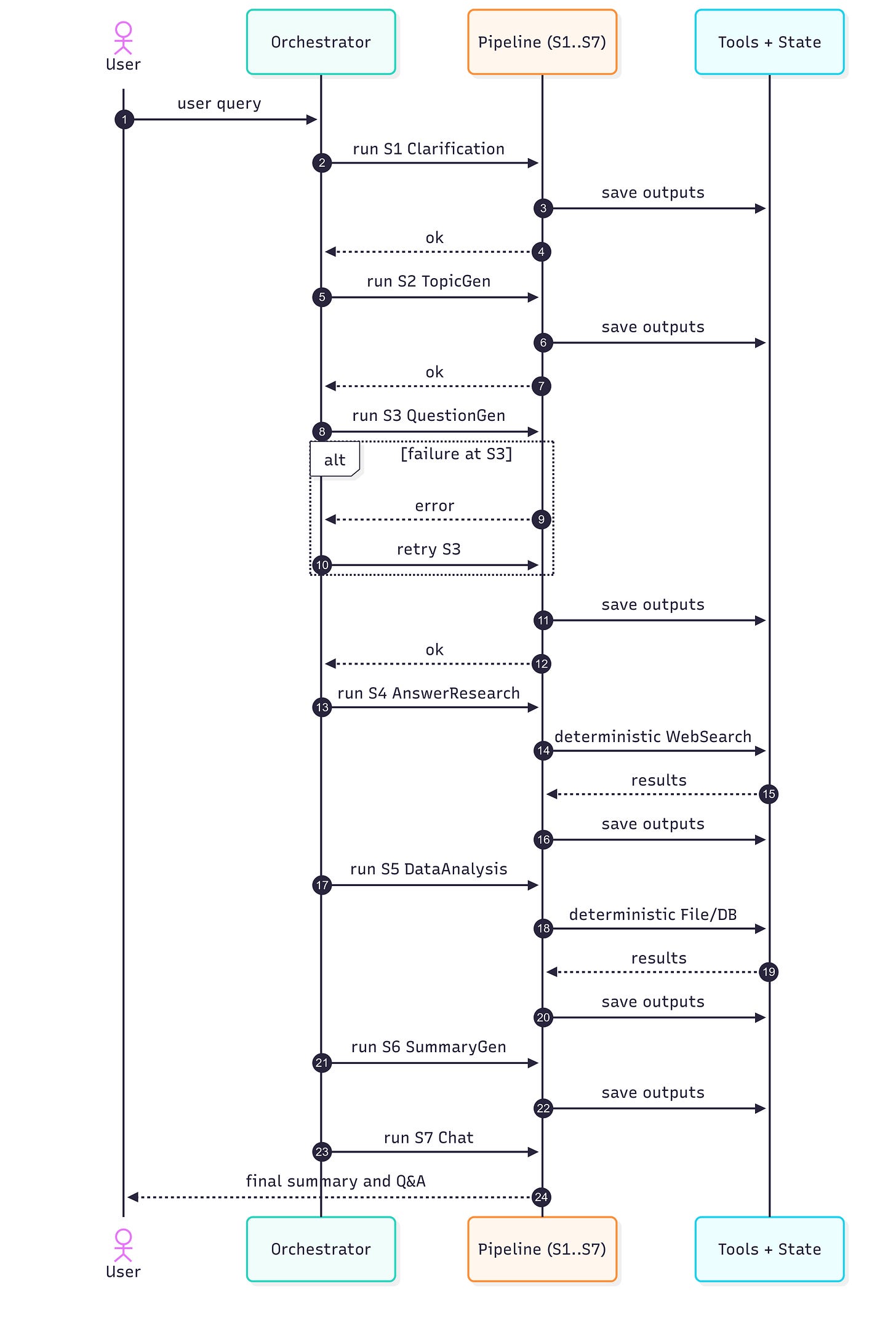

Moving to Sequential Workflows

To overcome these issues, I rebuilt the system with a deterministic, stage-based workflow:

Clarification check - assesses the user request and asks a clarifying question

Topic generation - generates topics to analyze based on the user query

Question generation - generates 5–10 questions related to the topic and the user query

Answer research - makes a web-search-enabled LLM request to answer each question

Data analysis - makes an LLM request to analyze data uploaded by the user in the context of the query

Summary generation - generates a final summary covering all topics

Chat - lets the user chat about the summary and the data generated during the analysis
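The fixed ordering of these stages can be sketched as below. This is a simplified illustration under assumptions: the `Stage` enum, `make_stub`, and `run_workflow` are hypothetical names, each handler is a stub where a real one would make a single scoped LLM request, and the interactive chat stage is treated as just another step for brevity.

```python
from enum import Enum
from typing import Any, Callable, Dict

State = Dict[str, Any]


class Stage(Enum):
    CLARIFICATION = 1
    TOPIC_GENERATION = 2
    QUESTION_GENERATION = 3
    ANSWER_RESEARCH = 4
    DATA_ANALYSIS = 5
    SUMMARY_GENERATION = 6
    CHAT = 7


def make_stub(stage: Stage) -> Callable[[State], State]:
    """Placeholder handler that only records that the stage ran."""
    def handler(state: State) -> State:
        return {**state, "completed": state["completed"] + [stage.name]}
    return handler


HANDLERS = {stage: make_stub(stage) for stage in Stage}


def run_workflow(query: str) -> State:
    """Run every stage once, in the order the enum defines them."""
    state: State = {"query": query, "completed": []}
    for stage in Stage:  # code, not an agent, decides what runs next
        state = HANDLERS[stage](state)
    return state


final_state = run_workflow("EV charging market in India")
print(final_state["completed"])
```

The point of the sketch is that the order lives in plain code, so no model output can change which stage runs next.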

Benefits of this architecture:

Each stage is independent and resumable (if stage 3 fails, retry just that stage), with explicit handoff and saved state.

A defined scope reduced hallucinations: each LLM call has one specific job.

Deterministic tool use: the stage defines whether a tool is used, so the agent never has to decide.
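One way to get the resumable behavior is to save state after every stage and skip stages that are already done. The sketch below assumes a JSON file as the store and uses hypothetical names (`run_with_checkpoints`, the stub `topics` and `questions` stages, the simulated failure); it illustrates the idea and is not the storage layer I actually used.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]
StageFn = Callable[[State], State]


def run_with_checkpoints(stages: List[Tuple[str, StageFn]], state: State,
                         path: Path, max_retries: int = 2) -> State:
    """Run stages in order, retrying a failed stage and saving after each one."""
    if path.exists():
        state = json.loads(path.read_text())  # resume from the saved state
    for name, fn in stages:
        if name in state.get("done", []):
            continue  # finished in an earlier run
        for attempt in range(max_retries + 1):
            try:
                new_state = fn(dict(state))  # stage works on a copy
                break
            except Exception:
                if attempt == max_retries:
                    raise  # earlier stages stay saved on disk
        state = {**new_state, "done": state.get("done", []) + [name]}
        path.write_text(json.dumps(state))
    return state


calls = {"questions": 0}


def topics(state: State) -> State:
    state["topics"] = ["pricing"]
    return state


def questions(state: State) -> State:
    calls["questions"] += 1
    if calls["questions"] == 1:
        raise RuntimeError("simulated transient failure")
    state["questions"] = [f"What drives {t}?" for t in state["topics"]]
    return state


stages = [("topics", topics), ("questions", questions)]
checkpoint = Path(tempfile.mkdtemp()) / "state.json"
result = run_with_checkpoints(stages, {"query": "EV market"}, checkpoint)
print(result["done"])
```

Here the simulated failure in `questions` triggers a retry of that stage only; `topics` is not run again.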

Key learnings for complex workflows

No method is perfect. For well-defined workflows, let code enforce orchestration, retries, and state, while agents handle judgment tasks like clarification, research, and synthesis.

For reliability, make tool calls stage-driven, not agent-decided. Yes, this means some unnecessary web searches, because entering the "research" stage always triggers one, but the trade-off is worth it for predictable, reliable behavior.
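A stage-driven tool policy can be as small as a lookup table. The sketch below is an assumption-laden illustration: `STAGE_TOOLS`, `build_request`, the stage keys, and the request dictionary shape are all hypothetical and not tied to any particular LLM provider's API. Only the research stage is given the web search tool, following the stage descriptions above.

```python
from typing import Any, Dict, List

# Hypothetical mapping from stage name to the tools its LLM request carries.
STAGE_TOOLS: Dict[str, List[str]] = {
    "clarification": [],
    "topic_generation": [],
    "question_generation": [],
    "answer_research": ["web_search"],  # always searches in this stage
    "data_analysis": [],
    "summary_generation": [],
    "chat": [],
}


def build_request(stage: str, prompt: str) -> Dict[str, Any]:
    """Attach tools based on the stage alone; the model gets no say."""
    if stage not in STAGE_TOOLS:
        raise ValueError(f"unknown stage: {stage}")
    return {"stage": stage, "prompt": prompt, "tools": list(STAGE_TOOLS[stage])}


request = build_request("answer_research", "How large is the EV charging market?")
print(request["tools"])
```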

Agents work better when each stage has a narrow scope and state is saved, so errors are contained and a retry affects only that step, not the whole workflow.

Next target: implementing the same deep-research workflow using the Agents SDK to explore structured memory, state persistence, and more reliable task handoff.

Interested in reading more about AI systems and my startup journey? Subscribe to thought.bitsnbytes.in